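torch.cuda.empty_cache(): How to Use

PyTorch does not hand GPU memory back to the driver as soon as a tensor is freed. Its caching allocator keeps the freed blocks reserved so that later allocations can reuse them without another round trip to CUDA.

`torch.cuda.empty_cache()` releases all unoccupied cached memory currently held by the caching allocator. Other GPU applications can then use that memory, and it shows up as free in `nvidia-smi`. It cannot free memory that is still occupied by live tensors, and it does not increase the amount of GPU memory available to PyTorch itself.

Here are several methods you can employ to free GPU memory in your PyTorch code:

- Empty the PyTorch cache with `torch.cuda.empty_cache()`, for example at some point inside a loop such as `for i, left in enumerate(dataloader):`.
- Call `gc.collect()` before `torch.cuda.empty_cache()`, so that objects kept alive only by reference cycles (and the tensors they own) are destroyed first.
- Move the model to the CPU and then delete it, before collecting garbage and emptying the cache.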
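
The methods above can be combined as in the following minimal sketch. It assumes a placeholder `nn.Linear` model. `report` is a hypothetical helper that prints `torch.cuda.memory_allocated()` (memory occupied by live tensors) and `torch.cuda.memory_reserved()` (occupied plus cached memory). On a CPU-only machine the cleanup steps still run, but nothing is printed.

```python
import gc

import torch


def report(tag: str) -> None:
    """Hypothetical helper: print occupied vs. reserved CUDA memory in MiB."""
    if torch.cuda.is_available():
        alloc = torch.cuda.memory_allocated() / 2**20
        reserved = torch.cuda.memory_reserved() / 2**20
        print(f"{tag}: allocated={alloc:.1f} MiB, reserved={reserved:.1f} MiB")


device = "cuda" if torch.cuda.is_available() else "cpu"
model = torch.nn.Linear(2048, 2048).to(device)  # placeholder model, about 16 MiB of float32 weights
report("after loading")

model.to("cpu")  # nn.Module.to works in place: parameters move back to host memory
del model        # drop the last Python reference to the module
gc.collect()     # destroy objects that are kept alive only by reference cycles
if torch.cuda.is_available():
    torch.cuda.empty_cache()  # return unoccupied cached blocks to the driver
report("after cleanup")
```

Watch `reserved` in the output. That number only drops after `empty_cache()`. `allocated` drops as soon as the GPU tensors themselves are gone.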

From www.vrogue.co

How To Clear The CUDA Memory In PyTorch · vrogue.co

From discuss.pytorch.org

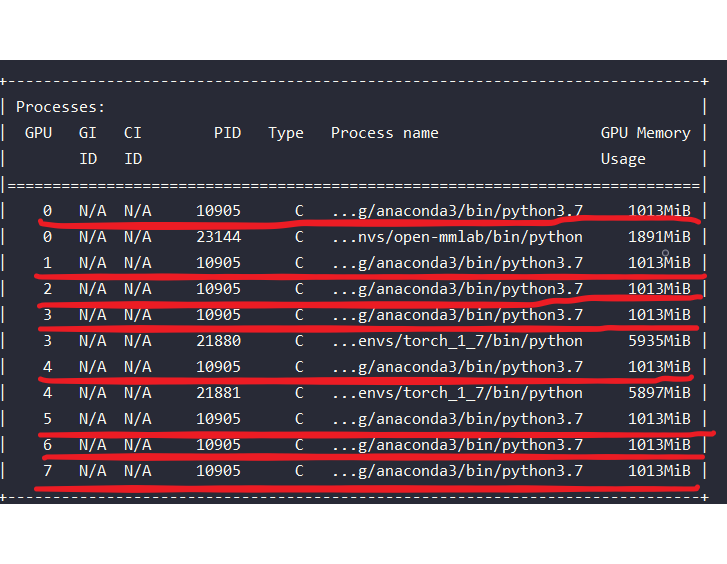

CUDA memory not released by torch.cuda.empty_cache() · distributed
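
Reports like this one often come from confusing memory occupied by live tensors with memory that is merely cached. That may not be the cause in this particular thread. The toy class below is a hypothetical, greatly simplified model of that bookkeeping. It is not PyTorch's actual allocator. In real code, the two quantities correspond to `torch.cuda.memory_allocated()` and `torch.cuda.memory_reserved()`.

```python
class ToyCachingAllocator:
    """Toy model (NOT PyTorch's allocator) of caching-allocator bookkeeping.

    Occupied blocks back live tensors. Cached blocks were freed by the program
    but are still reserved from the driver so they can be reused.
    """

    def __init__(self) -> None:
        self.occupied = {}  # handle -> block size in bytes
        self.cached = []    # sizes of free-but-still-reserved blocks
        self._next = 0

    def malloc(self, size: int) -> int:
        # Reuse an exact-size cached block if one exists; otherwise the block
        # would come from the driver (not modeled further here).
        if size in self.cached:
            self.cached.remove(size)
        handle = self._next
        self._next += 1
        self.occupied[handle] = size
        return handle

    def free(self, handle: int) -> None:
        # Freeing a tensor moves its block into the cache, not back to the driver.
        self.cached.append(self.occupied.pop(handle))

    def empty_cache(self) -> None:
        # Only unoccupied (cached) blocks can be released.
        self.cached.clear()

    def allocated(self) -> int:
        return sum(self.occupied.values())

    def reserved(self) -> int:
        return self.allocated() + sum(self.cached)


alloc = ToyCachingAllocator()
a = alloc.malloc(100)  # stays alive, like a tensor you still reference
b = alloc.malloc(200)
alloc.free(b)
print(alloc.allocated(), alloc.reserved())  # 100 300
alloc.empty_cache()
print(alloc.allocated(), alloc.reserved())  # 100 100: the live block is untouched
```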

From dxozleblt.blob.core.windows.net

torch.cuda.empty_cache() Slow at Amanda Glover blog
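
Part of why `empty_cache()` can feel slow is structural. Once it has returned cached blocks to the driver, the next allocation must request memory from CUDA again instead of reusing a cached block.

The sketch below times an allocate/free loop with and without an `empty_cache()` call on every step. `time_alloc_loop` is a hypothetical helper, and the sizes and step counts are arbitrary. Actual numbers depend on the GPU, driver, and PyTorch version, so none are shown.

```python
import time
from typing import Optional

import torch


def time_alloc_loop(steps: int, empty_every: Optional[int] = None, numel: int = 1 << 22) -> float:
    """Time `steps` allocate/free cycles, optionally calling
    torch.cuda.empty_cache() every `empty_every` steps. Returns seconds."""
    device = "cuda" if torch.cuda.is_available() else "cpu"
    start = time.perf_counter()
    for step in range(steps):
        x = torch.empty(numel, device=device)  # about 16 MiB of float32; served from the cache when possible
        del x                                  # on CUDA the block goes back to PyTorch's cache
        if device == "cuda" and empty_every and (step + 1) % empty_every == 0:
            torch.cuda.empty_cache()           # next torch.empty must request memory from the driver again
    if device == "cuda":
        torch.cuda.synchronize()               # wait for queued GPU work before stopping the clock
    return time.perf_counter() - start


if torch.cuda.is_available():
    print("never :", time_alloc_loop(1000))
    print("always:", time_alloc_loop(1000, empty_every=1))
```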

From zhuanlan.zhihu.com

Out of memory: use del on specific tensors often, and torch.cuda.empty_cache() only occasionally · Zhihu
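
The advice in that title (delete tensors often, empty the cache occasionally) can be sketched as a training loop. The model, the random data, and the `EMPTY_EVERY` interval are hypothetical placeholders. The interval in particular is an assumption to tune, or to drop entirely if memory is not tight.

```python
import torch
from torch import nn

device = "cuda" if torch.cuda.is_available() else "cpu"
model = nn.Linear(32, 1).to(device)  # placeholder model
opt = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = nn.MSELoss()
EMPTY_EVERY = 50  # hypothetical interval

losses = []
for step in range(200):
    x = torch.randn(64, 32, device=device)  # placeholder batch
    y = torch.randn(64, 1, device=device)
    out = model(x)
    loss = loss_fn(out, y)
    opt.zero_grad()
    loss.backward()
    opt.step()
    losses.append(loss.item())  # keep a Python float; storing the tensor would keep it alive
    del x, y, out, loss         # drop references so their blocks return to the cache
    if device == "cuda" and (step + 1) % EMPTY_EVERY == 0:
        torch.cuda.empty_cache()  # occasional, not every step
```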

From github.com

Use torch.cuda.empty_cache() in each iteration for large speedup and

From github.com

torch.cuda.empty_cache() is not working · Issue 86449 · pytorch
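
This example shows why `gc.collect()` has to come before `empty_cache()`. A tensor's memory returns to the cache only when the tensor is actually destroyed, and an object caught in a reference cycle is not destroyed by `del` alone. That is one common reason `empty_cache()` appears to do nothing, though not necessarily the one in this issue.

The stdlib-only sketch uses a hypothetical `Holder` class, with a `bytearray` standing in for a GPU tensor. The mechanism shown is CPython's cycle collector.

```python
import gc
import weakref


class Holder:
    """Hypothetical stand-in for any object that owns a large buffer (e.g. a GPU tensor)."""

    def __init__(self) -> None:
        self.buffer = bytearray(10 * 2**20)  # 10 MiB placeholder payload
        self.me = self                        # self-reference: creates a reference cycle


gc.disable()                # make the demo deterministic: no automatic cycle collection
h = Holder()
alive = weakref.ref(h)      # observe the object without keeping it alive
del h                       # the cycle keeps the refcount above zero
print(alive() is not None)  # True: still alive, so its buffer is still held
gc.collect()                # the cycle collector finds and destroys the object
print(alive() is None)      # True: the buffer is gone
gc.enable()
```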

From discuss.pytorch.org

How do I build this file to be included? (torch_cuda_cu.dll) · PyTorch

From blog.csdn.net

[Latest 2023 Solution] Installing CUDA, cuDNN, and the GPU version of PyTorch, and fixing torch.cuda.is_available() returning False · CSDN
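
When `torch.cuda.is_available()` returns `False`, a few facts usually narrow things down. If `torch.version.cuda` is `None`, a CPU-only build of PyTorch is installed. The function name `cuda_diagnostics` is hypothetical, and this is a minimal sketch rather than a complete checklist.

```python
import torch


def cuda_diagnostics() -> dict:
    """Collect basic facts for debugging torch.cuda.is_available() == False."""
    info = {
        "torch_version": torch.__version__,
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }
    if info["cuda_available"]:
        info["device_0"] = torch.cuda.get_device_name(0)
        info["cudnn_version"] = torch.backends.cudnn.version()
    return info


for key, value in cuda_diagnostics().items():
    print(f"{key}: {value}")
```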

From discuss.pytorch.org

Unable to clear CUDA cache · nlp · PyTorch Forums

From discuss.pytorch.org

PyTorch + Multiprocessing = CUDA out of memory · PyTorch Forums

From github.com

torch.cuda.empty_cache() write data to gpu0 · Issue 25752 · pytorch
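
The issue title reports that `empty_cache()` puts data on gpu0. Assume the cause is a CUDA context being created on the default device (device 0) in a process meant to use another GPU. One workaround is to make the intended device current before the call. You can do that with `torch.cuda.set_device()` early in each process or, as in this sketch, with the `torch.cuda.device` context manager. `empty_cache_on` is a hypothetical helper name.

```python
import torch


def empty_cache_on(device) -> None:
    """Call torch.cuda.empty_cache() with `device` as the current CUDA device,
    so the call is made on the intended GPU rather than the default device 0."""
    if not torch.cuda.is_available():
        return  # nothing to release on CPU-only machines
    with torch.cuda.device(device):
        torch.cuda.empty_cache()


# e.g. in a per-rank worker: empty_cache_on(local_rank)
```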

From github.com

[Bug] In web_demo, torch.cuda.empty_cache() has no effect: GPU memory keeps rising, and after a few rounds the model repeats its answers · Issue 90
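
The issue title does not say what fixed it. The following is an assumption-laden sketch of a common pattern for serving chat-style requests:

- Run the forward pass without recording an autograd graph.
- Copy the result to the CPU.
- Drop the per-request GPU tensors.
- Only then empty the cache.

The `nn.Linear` stands in for a real model, and `respond` is a hypothetical function name.

```python
import torch
from torch import nn

device = "cuda" if torch.cuda.is_available() else "cpu"
model = nn.Linear(16, 16)  # placeholder for a real chat model
model.to(device).eval()


@torch.inference_mode()  # no autograd graph is recorded inside this function
def respond(x: torch.Tensor) -> list:
    out = model(x.to(device))
    result = out.cpu().tolist()  # copy the result to host memory before returning
    del out                      # drop the per-request GPU tensor
    return result


for turn in range(3):
    reply = respond(torch.randn(1, 16))
    if device == "cuda":
        torch.cuda.empty_cache()  # release blocks cached during this turn
```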

From blog.csdn.net

Configuring GPU-based torch on a server (a fix for torch.cuda.is_available()) · Setting up a torch GPU environment on a server · CSDN Blog

From www.aiuai.cn

A Few Tips for Improving PyTorch Performance [Repost] · AI备忘录 (AI Memo)

From github.com

GPU memory does not clear with torch.cuda.empty_cache() · Issue 46602

From stlplaces.com

How to Clear CUDA Memory In Python in 2024?

From github.com

device_map='auto' causes memory to not be freed with torch.cuda.empty